Tree Trunk Detection of Eastern Red Cedar in Rangeland Environment with Deep Learning Technique

doi: 10.5552/crojfe.2023.2012

volume: 44, issue:

pp: 11

- Author(s):

- Badgujar Chetan

- Flippo Daniel

- Gunturu Sujith

- Baldwin Carolyn

- Article category:

- Original scientific paper

- Keywords:

- autonomous ground vehicle, eastern red cedar, invasive species, object detection, rangeland management, transfer learning, YOLO

Abstract

The uncontrolled spread of eastern red cedar (ERC) is invading prairie ecosystems of the United States Great Plains and lowering biodiversity across native grasslands. ERC infestations cause significant challenges for ranchers and landowners, including the high cost of removing mature red cedars, reduced livestock forage, and reduced revenue from hunting leases. Therefore, a fleet of autonomous ground vehicles (AGVs) is proposed to address the ERC infestation. Detecting the target tree or trunk in a rangeland environment is a critical step in automating ERC cutting. In this study, a tree trunk detection method for ERC in natural rangeland environments was developed using the deep learning-based YOLOv5 model. RGB images were acquired with an action camera in a natural rangeland environment. A transfer learning approach was adopted, and YOLOv5 was trained to detect ERC tree trunks of varying size. The trained model achieved a precision, recall, and average precision (at an IoU threshold of 0.5) of 87.8%, 84.3%, and 88.9%, respectively. The model accurately predicted varying tree trunk sizes and differentiated between trunks and branches. This study demonstrated the potential of pretrained deep learning models for tree trunk detection with RGB images. The developed machine vision system could be integrated with a fleet of AGVs for ERC cutting, and the proposed ERC tree trunk detection model would serve as a fundamental element of such a fleet, supporting effective rangeland management and the ecological balance of grassland systems.

1. Introduction

An invasion of eastern red cedar (Juniperus virginiana L.) on the rangelands, grasslands, and prairies of North America has become a severe threat to ecosystem functioning (Knapp et al. 2008, Van 2009, Ratajczak et al. 2012, Anadon et al. 2014, Archer et al. 2017, Wang et al. 2018). The scale of the invasion is significant (Wang et al. 2018) and continuing (Meneguzzo and Liknes 2015, Symstad and Leis 2017), with approximately 25,000 acres of new eastern red cedar (ERC) forest added annually in Nebraska (Nebraska Forest Service 2016) and 300,000 acres in Oklahoma (Drake and Todd 2002), and a 23,000 percent increase in ERC volume in Kansas over the last 45 years (Atchison et al. 2015). Similar increases in ERC acreage have been recorded in Missouri grasslands (Harr et al. 2014), and invasive junipers are present throughout the Great Plains ecoregion (Natural Resources Conservation Service 2010). The impact of the red cedar invasion on Great Plains grasslands has been likened to the 1930s Dust Bowl environmental crisis, identified as the most dominant ecological change occurring on rangelands (Engle et al. 2008, Twidwell et al. 2013a), and described as a »green glacier« overtaking the prairies (Bragg and Hulbert 1976, Gehring and Bragg 1992, Briggs et al. 2002a, Briggs et al. 2002b, Engle et al. 2008). As rangelands change from grassland to dense ERC forest, numerous negative consequences follow, including reduced streamflow (Zou et al. 2018), reduced grassland continuity (Coppedge et al. 2001b), reduced abundance and richness of grassland birds and mammals (Coppedge et al. 2001a, Horncastle et al. 2005, Knapp et al. 2008), poorer wildlife nutritional condition (Symstad and Leis 2017), and increased populations of disease-vector ticks (Noden and Dubie 2017).

ERC infestations cause significant challenges for ranchers and landowners, including prohibitively high costs for removing mature red cedars (Engle 1996, Taylor 2008, Natural Resources Conservation Service 2014), difficulty in handling livestock (Knezevic et al. 2005), and reduced revenue from hunting leases (Coffey 2011, Simonsen et al. 2015). The largest single economic impact is the loss of forage under ERC canopies, resulting in decreased livestock production (Engle 1996, Briggs et al. 2002a). The ERC infestation has resulted in significant economic losses. For example, since 2000, the state of Nebraska has lost nearly half a million acres of grazing land with an estimated economic impact of $18.7 million (University of Nebraska Lincoln 2022). ERC plays a key role in Great Plains wildfires because its branches are often low to the ground, readily burn due to their volatile oil content and act as ladder fuels to carry a ground fire up into the tree canopy (Oklahoma Forestry Service 2014). ERC presence in grasslands can intensify wildfires to the point that they are uncontrollable by ground-based firefighters (Twidwell et al. 2013b, Morrison 2016).

The primary options for ERC control are prescribed burning and mechanical tree removal. Prescribed burning is low-cost (Briggs et al. 2002a, Bidwell et al. 2017) and offers other ecosystem benefits (Fuhlendorf et al. 2011). However, some ranchers and landowners cannot or prefer not to burn because of safety concerns (Harr et al. 2014, Starr et al. 2019), or the fuel load available in ERC stands is insufficient to carry a prescribed burn (Engle 1996, Twidwell et al. 2009). The advantages of mechanical control include a 100% mortality rate for each tree cut (Coffey 2011) and the ability to kill mature trees that might escape a prescribed burn (Engle 1996, Ansley and Rasmussen 2005). In mechanical control, trees are cut manually with a chainsaw or with various forestry machines. The primary challenge of mechanical control is its high cost, ranging from $36/acre to $1001/acre (Coffey 2011, Baker et al. 2017). Although mechanical control costs depend on numerous factors, labor can be the most expensive component (Ortmann et al. 1998). Operator safety is another concern because maneuvering equipment is dangerous, especially on steep slopes (Mitchell and Klepac 2014, Simonsen et al. 2015).

Current manual labor costs make mechanical control methods too expensive for combating invasive ERC on American rangelands. Therefore, it is imperative to seek ways to use technology to address this grand challenge of rangeland conservation. Autonomous robots are well-positioned to reduce costs because they decrease labor and risk, and a fleet of autonomous robots could address several of the challenges associated with the mechanical control of ERC. A semi-autonomous robotic platform was developed to cut ERC, and the need for autonomous operation was identified (Badgujar et al. 2022). Detecting the target tree or trunk in a rangeland environment is a critical step in automating an ERC cutting operation; the machine vision component, which locates individual tree trunks, is therefore essential to autonomous ERC cutting robots.

Identifying a specific target (crop, flower, fruit, weed, vegetation) in a dynamic and variable environment with a vision-based robotic system is a challenging task. In recent years, however, deep learning (DL) methods have gained significant momentum (LeCun et al. 2015) and achieved impressive results on machine vision problems, including image classification, segmentation, and object detection, compared with conventional image-processing algorithms. In agriculture in particular, DL has been used for weed-plant classification (Dyrmann et al. 2016, Utstumo et al. 2018), plant identification (Lee et al. 2015, Grinblat et al. 2016), plant disease classification (Hall et al. 2015, Sladojevic et al. 2016, Amara et al. 2017), fruit detection (Sa et al. 2016, Bargoti and Underwood 2017, Liu et al. 2019, Zhang et al. 2021), fruit counting (Chen et al. 2017), obstacle detection (Christiansen et al. 2016), and apple trunk-branch segmentation (Majeed et al. 2020, Zhang et al. 2021). The accuracy, speed, and robustness of DL models, together with their ability to run on compact embedded hardware, make them well suited to mobile robots and automation.

Recently, DL-based object detection frameworks have evolved remarkably because they can accurately locate objects in an image and place bounding boxes around them. These frameworks combine two tasks, image classification and object localization, into a single framework. Fruit detection (an essential component of autonomous harvesters) has been studied extensively, including apple and tree branch detection with R-CNN (Zhang et al. 2018), MangoNet, a convolutional neural network (CNN) based architecture for mango detection (Kestur et al. 2019), apple detection across multiple growing seasons with YOLO-v3 (Tian et al. 2019), an SSD network for real-time on-tree mango detection (Liang et al. 2018), apple branch and trunk segmentation and detection with SegNet (Majeed et al. 2020) and multiple CNN architectures (Zhang et al. 2021), and Faster R-CNN to detect apples, branches, and leaves (Gao et al. 2020). Fruit detection studies have reported detection rates above 84% (Gao et al. 2020). However, only limited information is available on tree trunk detection with deep learning techniques.

Real-time detection and localization of ERC trees or trunks in a rangeland environment is an essential step toward an autonomous robotic tree-cutting system. Therefore, the primary goal of this study is to investigate a deep learning-based object detection technique for detecting ERC tree trunks in natural environments. The specific objectives are (1) to collect ERC tree trunk images in a natural field environment and (2) to train a deep learning-based ERC tree trunk detection model. This study would contribute fundamental knowledge toward the development of an automated robotic harvesting system for ERC trees. Moreover, combining real-time object detection with a Global Positioning System (GPS) could also assist robots in navigating dense forest environments.

2. Materials and Methods

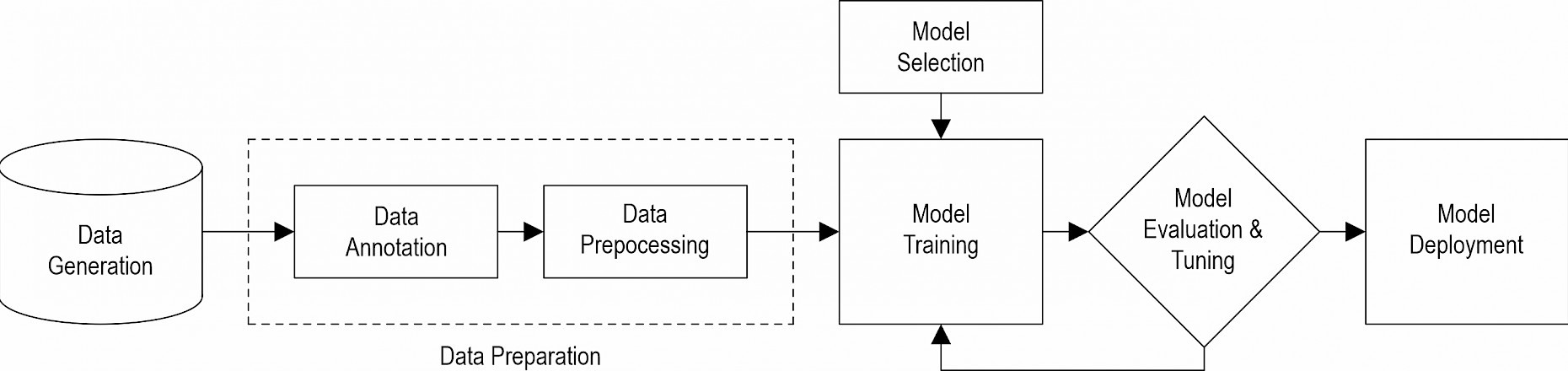

A supervised deep learning approach was implemented in this study, where a labeled dataset (images) was used to train a model that predicts the target outcomes. DL-based model development involved various steps, and the model development workflow is presented in Fig. 1.

Fig. 1 Workflow of supervised deep learning model development

2.1 Image Acquisition

Images of ERC tree trunks were acquired using a compact action camera (GoPro HERO4 Black, GoPro, San Mateo, CA, USA). The camera was mounted on the chassis of the semi-automatic KSU-Navigator robot, which was controlled by a radio transmitter-receiver. Images and videos were captured in a real field environment on a bright afternoon (12:00–13:00) in August at the Garzio Conservation Easement, Fairview, Kansas, USA. More than 1600 sRGB images with a resolution of 2560×1920 pixels were acquired under natural illumination. Because image resolution influences the performance of DL models, the images were captured at the highest resolution the camera hardware allowed; these high-resolution images can easily be downsized to meet model requirements during data preprocessing.

2.2 Data Annotation

Image annotation makes objects recognizable to vision-based DL models. The open-source graphical annotation tool »LabelImg« was used to annotate the input images. A single object class, the tree trunk, was used: each trunk was manually identified, a bounding box was drawn around it, and a label was assigned. Tree trunks with an insufficient or unclear pixel area, and trunks occluded by vegetation or by another trunk, were left unlabeled. The annotations were saved as text files in YOLO format. In total, 840 images were manually labeled with 4066 annotations, an average of 4.8 annotations per image for the single class.
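The YOLO label format stores one box per line as `class x_center y_center width height`, with coordinates normalized by the image width and height. This is the convention commonly documented for YOLOv5 and for LabelImg's YOLO export; the text does not spell it out, so treat it as an assumption. The minimal sketch below, with hypothetical function names that are not part of LabelImg or YOLOv5, converts such a line to pixel corner coordinates for a 2560×1920 source image and back.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def yolo_to_pixels(line: str, img_w: int, img_h: int) -> Tuple[int, Box]:
    """Parse one YOLO label line 'class x_center y_center width height' (coords normalized to 0..1)."""
    fields = line.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 fields, got {len(fields)}: {line!r}")
    class_id = int(fields[0])
    xc, yc, w, h = (float(v) for v in fields[1:])
    # Convert the normalized center/size to absolute pixel corners.
    box = (
        (xc - w / 2) * img_w,
        (yc - h / 2) * img_h,
        (xc + w / 2) * img_w,
        (yc + h / 2) * img_h,
    )
    return class_id, box


def pixels_to_yolo(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Inverse conversion: pixel corners -> normalized YOLO label line."""
    x_min, y_min, x_max, y_max = box
    xc = (x_min + x_max) / 2 / img_w
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # Hypothetical label for one trunk (class 0) in a 2560x1920 source image.
    print(yolo_to_pixels("0 0.5 0.6 0.1 0.5", 2560, 1920))
    print(pixels_to_yolo(0, (1152, 672, 1408, 1632), 2560, 1920))
```

Because the coordinates are normalized, a label file stays valid when the image is uniformly rescaled, but it must be shifted if padding is added around the image.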

2.3 Data Preprocessing

The annotated images were processed with the online platform »Roboflow«, which is widely used to preprocess raw images for computer vision models. All images were preprocessed and divided into training (70%), validation (20%), and test (10%) subsets. The images were auto-oriented to discard EXIF rotations and standardize pixel ordering. Resizing is a common preprocessing step in DL model training because larger input images take longer to train on, and vice versa. Therefore, the source images (2560×1920 pixels) were resized to squares (512×512 pixels) with white padded edges, preserving the original aspect ratio, for faster training. In addition, an adaptive histogram equalization algorithm was applied to enhance low-contrast images.
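Roboflow performed these steps in the study. For readers reproducing the pipeline locally, the sketch below shows two of them in plain Python under stated assumptions: a seeded 70/20/10 shuffle split, which yields the 588/168/84 images reported in Section 2.5, and the geometry of a fit-with-padding resize from 2560×1920 to 512×512, including how normalized YOLO labels must be remapped. It assumes the padding is split evenly between opposite sides; Roboflow's exact padding placement and split procedure are not described in the text. Function names are hypothetical, and adaptive histogram equalization is not shown.

```python
import random
from typing import Sequence, Tuple


def split_dataset(items: Sequence[str], fractions=(0.7, 0.2, 0.1), seed: int = 42):
    """Shuffle items reproducibly and split them into train/validation/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = round(n * fractions[0])
    n_val = round(n * fractions[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder, about fractions[2] of the data
    return train, val, test


def letterbox_params(src_w: int, src_h: int, dst: int = 512) -> Tuple[float, float, float]:
    """Scale factor and padding (assumed centered) for fitting an image into a dst x dst square."""
    scale = min(dst / src_w, dst / src_h)  # a single scale keeps the aspect ratio
    pad_x = (dst - src_w * scale) / 2
    pad_y = (dst - src_h * scale) / 2
    return scale, pad_x, pad_y


def remap_yolo_box(box, src_w: int, src_h: int, dst: int = 512):
    """Map a normalized YOLO box (xc, yc, w, h) from the source image into the padded square."""
    xc, yc, w, h = box
    scale, pad_x, pad_y = letterbox_params(src_w, src_h, dst)
    return (
        (xc * src_w * scale + pad_x) / dst,
        (yc * src_h * scale + pad_y) / dst,
        w * src_w * scale / dst,
        h * src_h * scale / dst,
    )


if __name__ == "__main__":
    names = [f"img_{i:03d}.jpg" for i in range(840)]  # hypothetical file names
    train, val, test = split_dataset(names)
    print(len(train), len(val), len(test))
    print(letterbox_params(2560, 1920))
```

For a 4:3 source, the scale is 0.2, so the image becomes 512×384 and 64 white pixels are added above and below; box heights shrink relative to the square while widths are unchanged.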

2.4 Model selection

Numerous DL architectures for object detection are available in both open-source and research domains. They fall into two types of object detectors:

One-stage detectors treat object detection as a regression problem, directly computing class probabilities and object coordinates to draw the bounding box. Examples of one-stage detectors are You Only Look Once (YOLO) and Single Shot Detector (SSD).

Two-stage detectors divide object detection into two stages. In the first stage, a Region Proposal Network (RPN) extracts candidate target regions from the image. In the second stage, the detector regresses the bounding box coordinates and classifies each object with an associated probability. Examples are Faster R-CNN and Mask R-CNN.

Two-stage detectors are typically more accurate, but the additional computation makes them relatively slow. In contrast, one-stage detectors are faster because they prioritize inference speed, at the cost of somewhat lower accuracy.

Inference speed, accuracy, and robustness are important metrics for implementing an object detection model, and each model type involves a trade-off among them. In this study, the model needed to be fast and accurate enough to run in real time, robust enough to adapt to real-world environments, and compact enough to run on single-board hardware. With these requirements in mind, pretrained YOLO architectures were selected.

YOLO is one of the most widely used object detection frameworks, with state-of-the-art performance. It frames target localization and classification as a single regression problem that predicts bounding box coordinates and class probabilities. YOLO was first introduced in 2016, and subsequent versions followed (Redmon et al. 2016a, Redmon et al. 2016b, Redmon and Farhadi 2018, Bochkovskiy et al. 2020); the most recent, Ultralytics' PyTorch-based YOLOv5 (Glenn 2020), was claimed to outperform all previous versions. Therefore, YOLOv5 was implemented in this study to detect ERC tree trunks.

The YOLOv5 architecture comprises three sections, described as follows:

The backbone is a Convolutional Neural Network (CNN) consisting of multiple convolution layers, pooling layers, and Cross Stage Partial (CSP) bottleneck networks, as shown in Fig. 2. The backbone extracts rich features from an image. CSP networks significantly reduce processing time in deeper networks by addressing the problem of duplicate gradient information, which reduces the number of model parameters and floating-point operations (FLOPs) (Wang et al. 2020). Spatial pyramid pooling (SPP) layers further improve the CNN by removing the constraint on input image size, converting a feature map of arbitrary size into a fixed-length feature vector. The backbone network generates four levels of feature maps.

The neck module consists of feature aggregation layers that build a feature pyramid network (FPN). Feature pyramids are essential for detecting objects at different scales and help the model generalize to unseen data. Different models implement different feature pyramid techniques; YOLOv5 uses the Path Aggregation Network (PANet), described in Liu et al. (2018). PANet boosts information flow and helps detect the same object at varying scales and sizes in the image.

The head module performs the final object detection. It applies anchor boxes to feature maps at three different scales and generates the final output vectors for each map, including class probabilities and bounding boxes.

Fig. 2 YOLOv5 Model architecture
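To make the head's output concrete, the sketch below counts the raw predictions produced for a square input and decodes one prediction into a box. It assumes the decoding used in recent Ultralytics YOLOv5 releases (sigmoid outputs scaled relative to the grid cell and the anchor) and the default anchors from the YOLOv5 model configuration files; the exact formulation in the release the authors used, and any anchors re-fitted to the ERC data, are not reported in the paper. Function names are hypothetical. With one class (tree trunk), each prediction carries 4 box values, 1 objectness score, and 1 class probability.

```python
import math
from typing import Dict, List, Tuple

# Default anchors (width, height in pixels) from the YOLOv5 model configs, keyed by
# output stride. Assumption: training may re-fit anchors, so actual anchors may differ.
DEFAULT_ANCHORS: Dict[int, List[Tuple[int, int]]] = {
    8: [(10, 13), (16, 30), (33, 23)],
    16: [(30, 61), (62, 45), (59, 119)],
    32: [(116, 90), (156, 198), (373, 326)],
}


def values_per_prediction(num_classes: int) -> int:
    """4 box values + 1 objectness score + one probability per class."""
    return 5 + num_classes


def num_predictions(img_size: int, strides=(8, 16, 32), anchors_per_cell: int = 3) -> int:
    """Raw predictions over the three output feature maps for a square input."""
    return sum(anchors_per_cell * (img_size // s) ** 2 for s in strides)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(raw, cell_xy, stride: int, anchor_wh):
    """Decode raw outputs (tx, ty, tw, th) into a box center and size in input-image pixels."""
    tx, ty, tw, th = raw
    gx, gy = cell_xy
    aw, ah = anchor_wh
    # Center offset lies in (-0.5, 1.5) cells relative to the cell's top-left corner.
    cx = (sigmoid(tx) * 2 - 0.5 + gx) * stride
    cy = (sigmoid(ty) * 2 - 0.5 + gy) * stride
    # Width and height lie in (0, 4) times the anchor size.
    w = (sigmoid(tw) * 2) ** 2 * aw
    h = (sigmoid(th) * 2) ** 2 * ah
    return cx, cy, w, h


if __name__ == "__main__":
    print(num_predictions(640), values_per_prediction(1))
    print(decode_box((0.0, 0.0, 0.0, 0.0), (10, 5), 8, DEFAULT_ANCHORS[8][0]))
```

At the 640-pixel training size given in Section 2.5, this yields 3 × (80² + 40² + 20²) = 25,200 candidate boxes per image, which are then filtered by a confidence threshold and non-maximum suppression.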

2.5 Model Training

A transfer learning approach was implemented in this study. In transfer learning, a model previously trained on a large dataset for a particular task (a pretrained model) serves as the starting point for a model performing a similar task. Transfer learning is therefore relatively fast and easy compared with training a model from scratch. Pretrained models built by deep learning experts and researchers are at the center of transfer learning. The YOLOv5 family contains several models, such as YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x, which vary in the width and depth of the CSP bottleneck module. These models were pretrained on the Common Objects in Context (COCO) dataset, which contains more than 200,000 labeled images from 80 classes. A pretrained YOLOv5l model was selected as the starting point, and the pretrained weights were downloaded automatically from the latest YOLOv5 release. The model was trained on 588 training images, and the validation set included 168 images; the validation set was used to monitor model performance during training. The following hyperparameters were specified: input image size (640), batch size (16), epochs (200), and pretrained weights (yolov5l.pt). Early stopping was implemented to avoid overfitting. Model losses (training and validation sets) and validation performance metrics, such as precision, average precision, recall, and F1 score, were saved to log files during training. An independent test set (84 images) was used for an unbiased evaluation of the final model. Model training was performed on Google Colab, a web-based online platform.
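The paper does not report the early stopping rule or its patience. A common scheme, sketched below in plain Python, tracks a validation fitness score each epoch, remembers the epoch with the best score (where the best weights would be saved), and stops after a fixed number of epochs without improvement. The fitness weighting (0.1 × AP@0.5 + 0.9 × AP@0.5:0.95) is assumed here to match the Ultralytics YOLOv5 default; the class and function names and the patience value are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def yolov5_style_fitness(p: float, r: float, ap50: float, ap50_95: float) -> float:
    """Weighted fitness; weights [0, 0, 0.1, 0.9] for [P, R, AP@0.5, AP@0.5:0.95] (assumed default)."""
    return 0.0 * p + 0.0 * r + 0.1 * ap50 + 0.9 * ap50_95


class EarlyStopping:
    """Stop training once validation fitness has not improved for `patience` epochs."""

    def __init__(self, patience: int = 30):
        self.patience = patience
        self.best_fitness = float("-inf")
        self.best_epoch = -1

    def step(self, epoch: int, fitness: float) -> bool:
        """Record one epoch's fitness; return True when training should stop."""
        if fitness > self.best_fitness:
            self.best_fitness = fitness
            self.best_epoch = epoch  # a real training loop would save the best weights here
        return epoch - self.best_epoch >= self.patience


def simulate(fitness_per_epoch: Sequence[float], patience: int) -> Tuple[Optional[int], int]:
    """Return (epoch at which training stops, or None; epoch of the best weights)."""
    stopper = EarlyStopping(patience)
    for epoch, fit in enumerate(fitness_per_epoch):
        if stopper.step(epoch, fit):
            return epoch, stopper.best_epoch
    return None, stopper.best_epoch


if __name__ == "__main__":
    # Made-up validation fitness values: improvement stalls after epoch 2.
    print(simulate([0.10, 0.20, 0.30, 0.30, 0.29, 0.25, 0.40], patience=3))
```

Weighting AP@0.5:0.95 heavily favors weights that localize boxes tightly, not only weights that find trunks at a loose IoU threshold.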

2.6 Model Evaluation Metrics

Evaluation metrics were used to assess model performance on the object detection task. Intersection over Union (IoU), precision (P), average precision (AP), recall (R), and F1 score (F1) are the metrics most commonly cited for evaluating object detectors in computer vision (Kamilaris and Prenafeta-Boldu 2018). IoU, also known as the »Jaccard index«, measures the similarity between two sets as the ratio of their intersection area to their union area. In object detection, the two sets are the predicted and ground truth boxes. The IoU score quantifies the overlap between these two boxes: an IoU of zero represents no overlap (0%), whereas an IoU of one represents complete (100%) overlap. Precision is the fraction of predicted bounding boxes that match a ground truth box, while recall is the fraction of ground truth boxes that the model detects. The F1 score measures the balance between precision and recall as their harmonic mean. Precision, recall, and F1 score range from 0 to 1. A higher precision means that detected boxes are more likely to match a ground truth box, and a high recall means that most ground truth boxes are correctly detected. Precision (P), recall (R), and F1 score (F1) are computed as follows:

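$$P = \frac{TP}{TP + FP} \tag{1}$$

$$R = \frac{TP}{TP + FN} \tag{2}$$

$$F1 = \frac{2 \times P \times R}{P + R} \tag{3}$$

where TP, FP, and FN are the numbers of true positives (predicted boxes that match a ground truth box at the chosen IoU threshold), false positives (predicted boxes without a match), and false negatives (ground truth boxes that were not detected).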
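The definitions above can be checked with a short, self-contained sketch. It computes IoU for corner-format boxes and counts TP, FP, and FN by greedily matching each prediction (assumed sorted by descending confidence) to the unmatched ground truth box with the highest IoU at or above the threshold. This greedy matching is a common simplification, not necessarily the exact procedure in YOLOv5's validation code; all names are illustrative.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_counts(preds: Sequence[Box], gts: Sequence[Box], thr: float = 0.5) -> Tuple[int, int, int]:
    """Greedy one-to-one matching; preds are assumed sorted by descending confidence."""
    matched = set()
    tp = 0
    for p in preds:
        best_j, best_iou = -1, thr
        for j, g in enumerate(gts):
            if j in matched:
                continue
            v = iou(p, g)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp  # predictions with no ground truth match
    fn = len(gts) - tp    # ground truth boxes never matched
    return tp, fp, fn


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Equations (1) to (3), returning 0 where a denominator is 0."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


if __name__ == "__main__":
    preds = [(0, 0, 10, 10), (20, 20, 30, 30), (50, 50, 60, 60)]  # made-up boxes
    gts = [(1, 1, 10, 10), (20, 20, 30, 31)]
    print(precision_recall_f1(*match_counts(preds, gts)))
```

Here two of the three predictions overlap a trunk with IoU above 0.5 and the third is a false positive, so P = 2/3, R = 1, and F1 = 0.8.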

Object detection accuracy was assessed by average precision (AP), the area under the precision-recall curve, i.e., the average precision across recall values from 0 to 1. AP was computed at different IoU thresholds because the threshold determines whether a prediction counts as a true positive, so each threshold can yield a different result. The model was evaluated with two IoU threshold settings:

AP@[0.5] denotes AP at an IoU threshold of 0.5.

AP@[0.5:0.95] denotes AP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05.
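A compact sketch of the two AP variants follows. It uses all-point interpolation (the area under a monotone precision envelope), which is one common way of computing AP; YOLOv5's own implementation may interpolate the curve differently, so results can differ slightly from the values reported here. The true-positive flags are assumed to come from matching at a single IoU threshold, and AP@[0.5:0.95] is the mean over ten thresholds. Names are illustrative.

```python
from typing import List, Sequence, Tuple

# IoU thresholds for AP@[0.5:0.95]: 0.50, 0.55, ..., 0.95 (ten values).
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def average_precision(detections: Sequence[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP from (confidence, is_true_positive) pairs at one IoU threshold."""
    if num_gt == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangle areas under the envelope at each recall step.
    ap, prev_recall = 0.0, 0.0
    for rec, prec in zip(recalls, precisions):
        ap += (rec - prev_recall) * prec
        prev_recall = rec
    return ap


def ap_50_95(ap_per_threshold: Sequence[float]) -> float:
    """AP@[0.5:0.95]: mean of the APs computed at the ten IOU_THRESHOLDS."""
    if len(ap_per_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one AP per IoU threshold")
    return sum(ap_per_threshold) / len(ap_per_threshold)


if __name__ == "__main__":
    # Made-up detections: two trunks in the ground truth, one false positive ranked second.
    print(average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2))
```

Because stricter thresholds turn loosely fitting boxes into false positives, AP@[0.5:0.95] is always at most AP@[0.5] for the same detections, which is consistent with the gap between the two values reported in Section 3.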

3. Results and Discussion

The YOLO loss function comprises three parts:

box loss, which measures how well the predicted bounding box (its center and size) matches the ground truth box;

objectness loss, which evaluates the confidence score of each predicted bounding box, i.e., whether an object is actually present in the box;

classification loss, which checks whether the model predicts the correct object class by comparing it with the ground truth label.

The classification loss was absent because the model was trained on a single class, the tree trunk. The model losses recorded at each training epoch are presented in Fig. 3. The box loss on the training and validation sets declined rapidly during the first 40 epochs. The validation box loss plateaued at around 50 epochs, while the training loss continued to decrease with the epoch number; this widening gap between training and validation loss indicated overfitting. Similarly, the objectness loss declined rapidly for both the training and validation sets; however, the validation objectness loss increased after 50 epochs, again indicating possible overfitting. Early stopping was implemented to avoid overfitting: training stopped at around 190 epochs because no further improvement was observed, and the best model weights were saved.

Fig. 3 YOLOv5 Model loss functions: a) Box loss, b) Objectness loss
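For the single-class trunk detector, only the box and objectness terms contribute. The sketch below shows simplified versions of both in plain Python: the box term as 1 − IoU over matched prediction and ground truth pairs, and the objectness term as binary cross-entropy on raw logits. Recent YOLOv5 releases use an IoU variant (CIoU) for the box term, set positive objectness targets from the predicted box's IoU, and weight the terms by hyperparameter gains before summing, so this illustrates the idea rather than reproducing the training loss. Function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def box_loss(pred: Sequence[Box], target: Sequence[Box]) -> float:
    """Mean (1 - IoU) over matched pairs; plain IoU is a simplification of YOLOv5's CIoU."""
    return sum(1.0 - iou(p, t) for p, t in zip(pred, target)) / len(pred)


def bce_with_logits(logit: float, target: float) -> float:
    """Numerically stable binary cross-entropy computed directly from a raw logit."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def objectness_loss(logits: Sequence[float], targets: Sequence[float]) -> float:
    """Mean BCE between objectness logits and targets (1 = trunk present, 0 = background)."""
    return sum(bce_with_logits(x, t) for x, t in zip(logits, targets)) / len(logits)


if __name__ == "__main__":
    print(box_loss([(0, 0, 2, 2)], [(1, 1, 3, 3)]))    # poorly aligned box -> high loss
    print(objectness_loss([4.0, -4.0], [1.0, 0.0]))    # confident, correct -> low loss
```

A widening gap between training and validation values of these two quantities is what Fig. 3 tracks across epochs.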

The model was evaluated on the validation set, and the F1 score, precision, and recall were observed. Precision reflects how many of the model's detections are correct and recall reflects how many of the trunks are found, while the F1 score combines both, making it a good aggregate indicator. Fig. 4 depicts the evaluation metrics used in the study. Precision, recall, and F1 score converged after 50 epochs. The high scores (around 0.85) for precision, recall, and F1 indicated that most ground truth objects were detected correctly, with no sign in these metrics of the model overfitting the training data. Moreover, average precision was around 0.85 at an IoU threshold of 0.5 and 0.42 over the 0.5:0.95 range, indicating reliable detection at the 0.5 threshold and less precise box localization under the stricter thresholds.

Fig. 4 YOLOv5 model results on: a) precision, b) recall, c) F1 score, d) AP@0.5, and e) AP@0.5:0.95

The detector estimates bounding box coordinates and class labels, each with a confidence score that reflects how likely it is that an object is present in the predicted box. Fig. 5 shows that raising the confidence threshold increased precision but decreased recall, and vice versa. Because of this inverse relationship, an object detector generally cannot achieve peak precision and peak recall at the same time. Balancing precision and recall is therefore essential, and the confidence threshold is one of the main parameters for doing so.

The F1 score represents the balance between precision and recall and is also helpful in determining the optimum confidence threshold for a given model. In general, a higher confidence threshold is preferred in object detection. The maximum F1 score was 0.86 and was observed over a wide range of confidence thresholds, from 0.10 to 0.6, before declining rapidly to 0, as shown in Fig. 5(c). For the developed tree trunk detector, a confidence threshold of 0.6 appears to be a reasonable choice: the F1 score there was 0.82, relatively high and close to the maximum of 0.86, and the corresponding precision and recall were around 0.87 and 0.78, respectively. Above 0.6, recall decreased significantly while precision remained near its maximum, as shown in Fig. 5. Lower thresholds (<0.6) would reduce the model's precision.

Fig. 5 YOLOv5 evaluation metrics against confidence score: a) precision, b) recall, c) F1 score
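The threshold trade-off in Fig. 5 can be illustrated with a small sweep: for each candidate confidence threshold, keep the detections at or above it, then compute precision, recall, and F1 from true-positive flags assigned at a fixed IoU threshold. The detections below are invented for illustration, not the study's data; the function names are hypothetical, and YOLOv5 produces its own curves internally.

```python
from typing import List, Sequence, Tuple

Row = Tuple[float, float, float, float]  # (threshold, precision, recall, F1)


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    return 2 * p * r / (p + r) if p + r > 0 else 0.0


def sweep_confidence(scored: Sequence[Tuple[float, bool]], num_gt: int,
                     thresholds: Sequence[float]) -> List[Row]:
    """P, R, and F1 for the detections kept at each confidence threshold.

    `scored` holds (confidence, is_true_positive) pairs already matched at a fixed IoU threshold.
    """
    rows: List[Row] = []
    for t in thresholds:
        kept = [is_tp for conf, is_tp in scored if conf >= t]
        tp = sum(kept)
        p = tp / len(kept) if kept else 0.0  # convention used here: 0 when nothing is kept
        r = tp / num_gt if num_gt else 0.0
        rows.append((t, p, r, f1_score(p, r)))
    return rows


def best_f1_threshold(rows: Sequence[Row]) -> Row:
    """Row with the highest F1 (the first one wins on ties)."""
    return max(rows, key=lambda row: row[3])


if __name__ == "__main__":
    # Made-up detections for 5 ground-truth trunks.
    scored = [(0.95, True), (0.9, True), (0.8, True), (0.7, False),
              (0.6, True), (0.3, False), (0.2, False)]
    for row in sweep_confidence(scored, num_gt=5, thresholds=[0.1, 0.5, 0.75, 0.9]):
        print("conf>=%.2f  P=%.2f  R=%.2f  F1=%.2f" % row)
```

As a check on the values reported for the trunk detector, the harmonic mean of P ≈ 0.87 and R ≈ 0.78 is 2(0.87)(0.78)/(0.87 + 0.78) ≈ 0.82, matching the F1 score at a confidence threshold of 0.6.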

After training was completed, the best model weights were saved. The final model was tested on the separate test set, which provided unbiased estimates of its precision, recall, and average precision. The precision, recall, AP@0.5, and AP@0.5:0.95 were 0.878, 0.843, 0.889, and 0.441, respectively. Fig. 6 shows tree trunks detected in various images, with their confidence scores. The final model was also used to detect tree trunks in captured video, and the result is available at the following link (https://youtu.be/wtL3XEKLm8c). The model accurately predicts tree trunks of varying size and differentiates between trunks and branches. However, under some natural lighting conditions and in shadows, trunks were not detected. In low light or shadowed regions, the images did not contain enough trunk feature information for the model to learn, which often caused problems. Therefore, capturing images under various lighting and shadow conditions and training models on such diverse datasets is recommended, since models trained on diverse data usually perform better.

Fig. 6 YOLOv5 model predictions in an actual environment. The detected trunk is shown with a gray bounding box with detection probability

4. Conclusions

This study targeted the development of deep learning-based machine vision techniques to detect ERC tree trunks in a real-world rangeland environment. A pretrained YOLOv5 model was selected and trained with a transfer learning approach on an ERC tree trunk dataset, using RGB images as input. The model achieved a precision and recall of 0.878 and 0.843, respectively, on the test set without overfitting. The developed algorithm accurately predicted tree trunks of varying size in both images and video. This study explored the potential of deep learning methods and provided fundamental knowledge that is an essential first step toward developing an automated fleet of AGVs for ERC cutting operations.

5. References

Amara, J., Bouaziz, B., Algergawy, A., 2017: A deep learning-based approach for banana leaf diseases classification. In: Lecture Notes in Informatics (LNI), Gesellschaft für Informatik, Bonn.

Anadon, J.D., Sala, O.E., Turner, B.L., Bennett, E.M., 2014: Effect of woody-plant encroachment on livestock production in North and South America. Proceedings of the National Academy of Sciences 111(35): 12948–12953. https://doi.org/10.1073/pnas.1320585111

Ansley, R.J., Rasmussen, G.A., 2005: Managing Native Invasive Juniper Species Using Fire. Weed Technology 19(3): 517–522. https://www.jstor.org/stable/3989470

Archer, S.R., Andersen, E.M., Predick, K.I., Schwinning, S., Steidl, R.J., Woods, S.R., 2017: Woody Plant Encroachment: Causes and Consequences. In: Rangeland Systems. Springer Series on Environmental Management. Springer, 25–84. https://doi.org/10.1007/978-3-319-46709-2_2

Atchison, R., Daniels, R., Martinson, E., Stadtlander, M., 2015: Kansas Forest Action Plan. Kansas Forest Service Report.

Badgujar, C., Flippo, D., Badua, S., Baldwin, C., 2023: Development and Evaluation of Pasture Tree Cutting Robot: Proof-of-Concept Study. Croat. j. for. eng. 44(1): 1–11. https://doi.org/10.5552/crojfe.2023.1731

Baker, D., Smidt, M., Mitchell, D., 2017: Biomass chipping for eastern redcedar. In: Proceedings of the 2017 Council on Forest Engineering meeting, »Forest engineering, from where we’ve been, to where we’re going«. Bangor, ME, 1–8.

Bargoti, S., Underwood, J., 2017: Deep Fruit Detection in Orchards. IEEE International Conference on Robotics and Automation, Singapore, May 29 – June 3, 3626–3633. https://doi.org/10.48550/arXiv.1610.03677

Bidwell, T.G., Weir, J.R., Engle, D.M., 2017: Eastern Redcedar Control and Management-Best Management Practices to Restore Oklahoma’s Ecosystems. Extension article, Oklahoma State University, 4 p.

Bochkovskiy, A., Wang, C.-Y., Liao, H.-Y. M., 2020: YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv:2004.10934, 17 p. https://doi.org/10.48550/arXiv.2004.10934

Bragg, T.B., Hulbert, L.C., 1976: Woody Plant Invasion of Unburned Kansas Bluestem Prairie. Journal of Range Management 29(1): 19–24.

Briggs, J.M., Hoch, G.A., Johnson, L.C., 2002a: Assessing the Rate, Mechanisms, and Consequences of the Conversion of Tallgrass Prairie to Juniperus virginiana Forest. Ecosystems 5(6): 578–586. https://www.jstor.org/stable/3658734

Briggs, J.M., Knapp, A.K., Brock, B.L., 2002b: Expansion of Woody Plants in Tallgrass Prairie: A Fifteen-year Study of Fire and Fire-grazing Interactions. The American Midland Naturalist 147(2): 287–294. https://doi.org/10.1674/0003-0031(2002)147[0287:EOWPIT]2.0.CO;2

Chen, S.W., Shivakumar, S.S., Dcunha, S., Das, J., Okon, E., Qu, C., Taylor, C.J., Kumar, V., 2017: Counting Apples and Oranges With Deep Learning: A Data-Driven Approach. IEEE Robotics and Automation Letters 2(2): 781–788. https://doi.org/10.1109/LRA.2017.2651944

Christiansen, P., Nielsen, L., Steen, K., Jørgensen, R., Karstoft, H., 2016: DeepAnomaly: Combining Background Subtraction and Deep Learning for Detecting Obstacles and Anomalies in an Agricultural Field. Sensors 16(11): 1904. https://doi.org/10.3390/s16111904

Coffey, A., 2011: Private Benefits of Eastern Redcedar Management and the Impact of Changing Stocker Value of Gain. MSc thesis, Oklahoma State University, Stillwater, Oklahoma, 1–50.

Coppedge, B.R., Engle, D.M., Fuhlendorf, S.D., Masters, R.E., Gregory, M.S., 2001a: Landscape cover type and pattern dynamics in fragmented southern Great Plains grasslands, USA. Landscape Ecology 16(8): 677–690. https://doi.org/10.1023/A:1014495526696

Coppedge, B.R., Engle, D.M., Masters, R.E., Gregory, M.S., 2001b: Avian Response to Landscape Change in Fragmented Southern Great Plains Grasslands. Ecological Applications 11(1): 47–59. https://doi.org/10.2307/3061054

Drake, B., Todd, P., 2002: A Strategy for Control and Utilization of Invasive Juniper Species in Oklahoma. Final Report of the »Redcedar Task Force«, Oklahoma Dept. of Agriculture, Food and Forestry, 1–54.

Dyrmann, M., Karstoft, H., Midtiby, H., 2016: Plant species classification using deep convolutional neural network. Biosystems Engineering 151: 72–80. https://doi.org/10.1016/j.biosystemseng.2016.08.024

Engle, D., 1996: A decision support system for designing juniper control treatments. AI Applications 10: 1–11.

Engle, D., Coppedge, B., Fuhlendorf, S., 2008: From the Dust Bowl to the Green Glacier: Human Activity and Environmental Change in Great Plains Grasslands. In Book: Western North American Juniperus Communities: A Dynamic Vegetation Type, Part of the Ecological Studies book series, Springer, 253–271. https://doi.org/10.1007/978-0-387-34003-6_14

Fuhlendorf, S., Limb, R.F., Engle, D., Miller, R., 2011: Assessment of Prescribed Fire as a Conservation Practice. In: Conservation Benefits of Rangeland Practices. Natural Resources Conservation Service, United States Department of Agriculture, 77–104.

Gao, F., Fu, L., Zhang, X., Majeed, Y., Li, R., Karkee, M., Zhang, Q., 2020: Multi-class fruit-on-plant detection for apple in SNAP system using Faster R-CNN. Computers and Electronics in Agriculture 176: 105634. https://doi.org/10.1016/j.compag.2020.105634

Gehring, J.L., Bragg, T.B., 1992: Changes in Prairie Vegetation under Eastern Red Cedar (Juniperus virginiana L.) in an Eastern Nebraska Bluestem Prairie. American Midland Naturalist 128(2): 209–217. https://doi.org/10.2307/2426455

Glenn, J., 2020: ultralytics/yolov5: v3.1 – Bug Fixes and Performance Improvements. https://doi.org/10.5281/zenodo.4154370

Grinblat, G., Uzal, L., Larese, M., Granitto, P., 2016: Deep learning for plant identification using vein morphological patterns. Computers and Electronics in Agriculture 127: 418–424. https://doi.org/10.1016/j.compag.2016.07.003

Hall, D., McCool, C., Dayoub, F., Sunderhauf, N., Upcroft, B., 2015: Evaluation of Features for Leaf Classification in Challenging Conditions. In: IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2015, 797–804. https://doi.org/10.1109/WACV.2015.111

Harr, R.N., Wright Morton, L., Rusk, S.R., Engle, D.M., Miller, J.R., Debinski, D., 2014: Landowners’ perceptions of risk in grassland management: woody plant encroachment and prescribed fire. Ecology and Society 19(2): 41. https://www.jstor.org/stable/26269548

Horncastle, V.J., Hellgren, E.C., Mayer, P.M., Ganguli, A.C., Engle, D.M., Leslie, D.M., 2005: Implications of invasion by Juniperus virginiana on small mammals in the southern Great Plains. Journal of Mammalogy 86(6): 1144–1155. https://doi.org/10.1644/05-MAMM-A-015R1.1

Kamilaris, A., Prenafeta-Boldú, F.X., 2018: Deep learning in agriculture: A survey. Computers and Electronics in Agriculture 147: 70–90. https://doi.org/10.1016/j.compag.2018.02.016

Kestur, R., Meduri, A., Narasipura, O., 2019: MangoNet: A deep semantic segmentation architecture for a method to detect and count mangoes in an open orchard. Engineering Applications of Artificial Intelligence 77: 59–69. https://doi.org/10.1016/j.engappai.2018.09.011

Knapp, A.K., McCarron, J.K., Silletti, A.M., Hoch, G.A., Heisler, J.C., Lett, M.S., Blair, J.M., Briggs, J.M., Smith, M.D., 2008: Ecological Consequences of the Replacement of Native Grassland by Juniperus virginiana and Other Woody Plants. In book: Western North American Juniperus Communities, Springer, New York, 156–169. https://doi.org/10.1007/978-0-387-34003-6_8

Knezevic, S.Z., Melvin, S., Gompert, T., 2005: EC05-186 Integrated Management of Eastern Redcedar. University of Nebraska, Lincoln Extension, 9 p.

LeCun, Y., Bengio, Y., Hinton, G., 2015: Deep learning. Nature 521: 436–444. https://doi.org/10.1038/nature14539

Lee, S.H., Chan, C.S., Wilkin, P., Remagnino, P., 2015: Deep-plant: Plant identification with convolutional neural networks. In 2015 IEEE International Conference on Image Processing (ICIP), 452–456. https://doi.org/10.1109/ICIP.2015.7350839

Liang, Q., Zhu, W., Long, J., Wang, Y., Sun, W., Wu, W., 2018: A Real-Time Detection Framework for On-Tree Mango Based on SSD Network. In Proceedings of 11th International Conference, ICIRA 2018, Newcastle, NSW, Australia, August 9–11, Part II, 423–436. https://doi.org/10.1007/978-3-319-97589-4_36

Liu, S., Qi, L., Qin, H., Shi, J., Jia, J., 2018: Path Aggregation Network for Instance Segmentation. In 2018 IEEE/CVF Conference on Computer Vision & Pattern Recognition, Salt Lake City, 8759–8768.

Liu, Z., Feng, Y., Li, R., Zhang, S., Zhang, L., Cui, G., Ahmad, A.-M., Fu, L., Cui, Y., 2019: Improved kiwifruit detection using VGG16 with RGB and NIR information fusion. American Society of Agricultural and Biological Engineers Annual International Meeting, Boston, Massachusetts, July 7–10, 2019. https://doi.org/10.13031/aim.201901260

Majeed, Y., Zhang, J., Zhang, X., Fu, L., Karkee, M., Zhang, Q., Whiting, M.D., 2020: Deep learning based segmentation for automated training of apple trees on trellis wires. Computers and Electronics in Agriculture 170: 105277. https://doi.org/10.1016/j.compag.2020.105277

Meneguzzo, D.M., Liknes, G.C., 2015: Status and Trends of Eastern Redcedar (Juniperus virginiana) in the Central United States: Analyses and Observations Based on Forest Inventory and Analysis Data. Journal of Forestry 113(3): 325–334. https://doi.org/10.5849/jof.14-093

Mitchell, D., Klepac, J., 2014: Harvesting considerations for ecosystem restoration projects. In: Proceedings of the Global Harvesting Technology, 37th Council on Forest Engineering Annual Meeting, Moline, Illinois, 7 p.

Morrison, O., 2016: Sweat and luck: How firefighters fought the biggest Kansas fire in a century. Wichita Eagle.

Natural Resources Conservation Service, 2010: National resources inventory rangeland resource assessment-native invasive woody species. Technical report, Natural Resources Conservation Service, USDA.

Natural Resources Conservation Service, 2014: Brush management design procedures 314P. Natural Resources Conservation Service Report, Nebraska.

Nebraska Forest Service, 2016: Eastern redcedar in Nebraska: Problems and opportunities. Nebraska Conservation Roundtable, Issue Paper 1.

Noden, B.H., Dubie, T., 2017: Involvement of invasive eastern red cedar (Juniperus virginiana) in the expansion of Amblyomma americanum in Oklahoma. Journal of Vector Ecology 42(1): 178–183. https://doi.org/10.1111/jvec.12253

Oklahoma Forestry Service, 2014: Eastern Redcedar Frequently asked questions – Oklahoma Forestry Service.

Ortmann, J., Stubbendieck, J., Masters, R.A., Pfeiffer, G.H., Bragg, T.B., 1998: Efficacy and costs of controlling eastern redcedar. Publications from USDA-ARS / UNL Faculty 1072.

Ratajczak, Z., Nippert, J.B., Collins, S.L., 2012: Woody encroachment decreases diversity across North American grasslands and savannas. Ecology 93(4): 697–703. https://doi.org/10.1890/11-1199.1

Redmon, J., Divvala, S., Girshick, R., Farhadi, A., 2016a: You Only Look Once: Unified, Real-Time Object Detection. arXiv:1506.02640 [cs].

Redmon, J., Divvala, S., Girshick, R., Farhadi, A., 2016b: You Only Look Once: Unified, Real-Time Object Detection. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). https://doi.org/10.1109/CVPR.2016.91

Redmon, J., Farhadi, A., 2018: YOLOv3: An Incremental Improvement. arXiv:1804.02767 [cs].

Sa, I., Ge, Z., Dayoub, F., Upcroft, B., Perez, T., McCool, C., 2016: DeepFruits: A Fruit Detection System Using Deep Neural Networks. Sensors 16(8): 1222. https://doi.org/10.3390/s16081222

Simonsen, V.L., Fleischmann, J.E., Whisenhunt, D.E., Volesky, J.D., Twidwell, D., 2015: Act now or pay later: Evaluating the cost of reactive versus proactive eastern redcedar management. Extension articles, University of Nebraska–Lincoln Extension.

Sladojevic, S., Arsenovic, M., Anderla, A., Culibrk, D., Stefanovic, D., 2016: Deep Neural Networks Based Recognition of Plant Diseases by Leaf Image Classification. Computational Intelligence and Neuroscience, 1–11. https://doi.org/10.1155/2016/3289801

Starr, M., Joshi, O., Will, R.E., Zou, C.B., 2019: Perceptions regarding active management of the Cross-timbers forest resources of Oklahoma, Texas, and Kansas: A SWOT-ANP analysis. Land Use Policy 81: 523–530. https://doi.org/10.1016/j.landusepol.2018.11.004

Symstad, A.J., Leis, S.A., 2017: Woody Encroachment in Northern Great Plains Grasslands: Perceptions, Actions, and Needs. Natural Areas Journal 37(1): 118–127. https://doi.org/10.3375/043.037.0114

Taylor, C.A., 2008: Ecological Consequences of Using Prescribed Fire and Herbivory to Manage Juniperus Encroachment. In Book: Western North American Juniperus Communities, Springer, New York, 239–252. https://doi.org/10.1007/978-0-387-34003-6_13

Tian, Y., Yang, G., Wang, Z., Wang, H., Li, E., Liang, Z., 2019: Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Computers and Electronics in Agriculture 157: 417–426. https://doi.org/10.1016/j.compag.2019.01.012

Twidwell, D., Fuhlendorf, S.D., Engle, D.M., Taylor, C.A., 2009: Surface Fuel Sampling Strategies: Linking Fuel Measurements and Fire Effects. Rangeland Ecology & Management 62(3): 223–229. https://doi.org/10.2111/08-124R2.1

Twidwell, D., Allred, B.W., Fuhlendorf, S.D., 2013a: National-scale assessment of ecological content in the world’s largest land management framework. Ecosphere 4(8): 94. https://doi.org/10.1890/ES13-00124.1

Twidwell, D., Fuhlendorf, S.D., Taylor, C.A., Rogers, W.E., 2013b: Refining thresholds in coupled fire–vegetation models to improve management of encroaching woody plants in grasslands. Journal of Applied Ecology 50(3): 603–613. https://doi.org/10.1111/1365-2664.12063

University of Nebraska–Lincoln, 2022: Eastern Redcedar Science Literacy Project – FAQ for Scientists. Available online: https://agronomy.unl.edu/eastern-redcedar-science-literacy-project/faqs.

Utstumo, T., Urdal, F., Brevik, A., Dorum, J., Netland, J., Overskeid, O., Berge, T.W., Gravdahl, J.T., 2018: Robotic in-row weed control in vegetables. Computers and Electronics in Agriculture 154: 36–45. https://doi.org/10.1016/j.compag.2018.08.043

Van Auken, A.O., 2009: Causes and consequences of woody plant encroachment into western North American grasslands. Journal of Environmental Management 90(10): 2931–2942. https://doi.org/10.1016/j.jenvman.2009.04.023

Wang, C.Y., Mark Liao, H.Y., Wu, Y.H., Chen, P.Y., Hsieh, J.W., Yeh, I.H., 2020: CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 1571–1580.

Wang, J., Xiao, X., Qin, Y., Doughty, R.B., Dong, J., Zou, Z., 2018: Characterizing the encroachment of juniper forests into sub-humid and semi-arid prairies from 1984 to 2010 using PALSAR and Landsat data. Remote Sensing of Environment 205: 166–179. https://doi.org/10.1016/j.rse.2017.11.019

Zhang, J., He, L., Karkee, M., Zhang, Q., Zhang, X., Gao, Z., 2018: Branch detection for apple trees trained in fruiting wall architecture using depth features and regions-convolutional neural network (R-CNN). Computers and Electronics in Agriculture 155: 386–393. https://doi.org/10.1016/j.compag.2018.10.029

Zhang, X., Karkee, M., Zhang, Q., Whiting, M.D., 2021: Computer vision-based tree trunk and branch identification and shaking points detection in Dense-Foliage canopy for automated harvesting of apples. Journal of Field Robotics 38(3): 476–493. https://doi.org/10.1002/rob.21998

Zou, C.B., Twidwell, D., Bielski, C.H., Fogarty, D.T., Mittelstet, A.R., Starks, P.J., Will, R.E., Zhong, Y., Acharya, B.S., 2018: Impact of Eastern Redcedar Proliferation on Water Resources in the Great Plains USA-Current State of Knowledge. Water 10(12): 1768. https://doi.org/10.3390/w10121768

© 2023 by the authors. Submitted for possible open access publication under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Authors' addresses:

Chetan Badgujar, PhD *

e-mail: chetan19@ksu.edu

Kansas State University

Biological and Agricultural Engineering

Seaton 1037A, 920 N. Martin Luther King Jr. Drive

Manhattan, KS 66506

USA

Daniel Flippo, PhD

e-mail: dkflippo@ksu.edu

Kansas State University

Biological and Agricultural Engineering

Seaton Hall 1033, 920 N. Martin Luther King Jr. Drive

Manhattan, KS 66506

USA

Sujith Gunturu, MSc

e-mail: sguntur@ksu.edu

Kansas State University

Computer Science

Seaton 1037A, 920 N. Martin Luther King Jr. Drive

Manhattan, KS 66506

USA

Assoc. prof. Carolyn Baldwin, PhD

e-mail: carolbaldwin@ksu.edu

Kansas State University

Agriculture, Natural Resources and Community Vitality

Umberger 103, 1612 Claflin

Manhattan, Kansas 66506

USA

* Corresponding author

Received: February 04, 2022

Accepted: October 16, 2022

Original scientific paper